Sixth Edition

Music Authorship in AI + Human Collaborations

By Judith Finell

Technology has been expanding the dissemination, audibility, and accessibility of music since the invention of the printing press in the fifteenth century. With Thomas Edison and others, the advent of sound recordings revolutionized the field, along with wax cylinders, phonographs, amplification, player pianos, radio, digitization, and more. There is no question that machines have enabled the growth and development of music well beyond the concert hall.

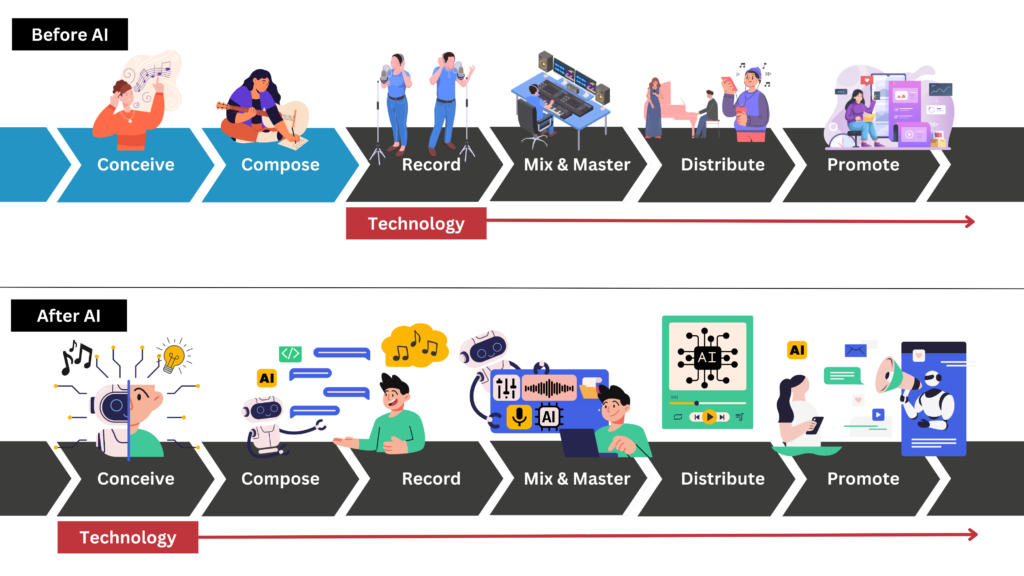

Until recent decades, technological tools have enhanced music’s reach mostly AFTER a musical work has been created in full, rather than at the beginning of the creative process. Today, with AI tools in their latest and most accessible forms, we are now collaborating in the origination of music from its conception. This development has the potential to disrupt the long-established protection system built on the sanctity of human authorship.

Before and After AI

Graphic created by Alice Deng

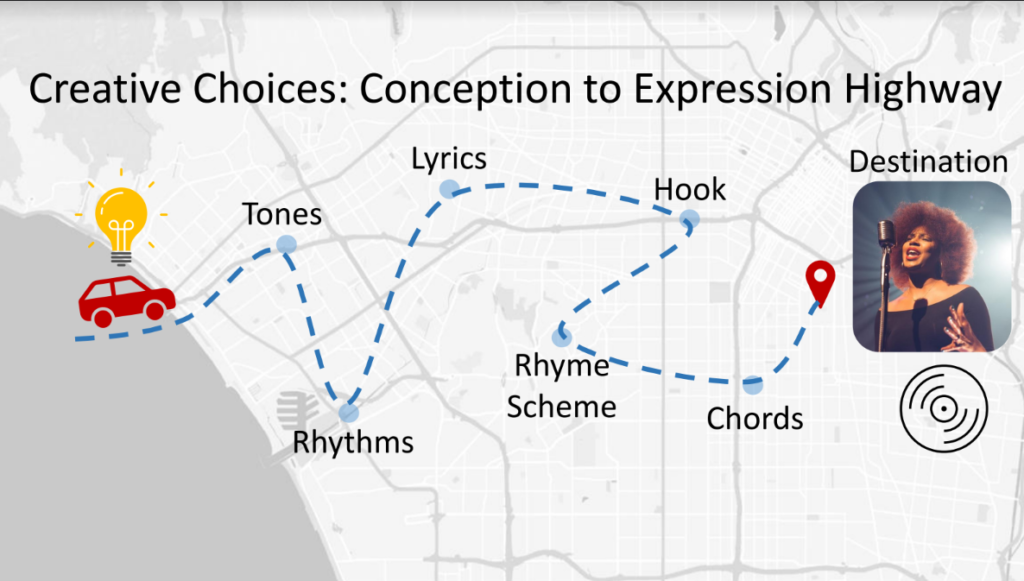

A musical composition is an organization of sound, resulting from a process beginning with an initial idea and ending with the expression of that idea in a full musical composition. Copyright protects the expression of the idea, not the idea itself. For example, an idea alone would be a love song about first love and a broken heart. The expression of that idea would be the specific lyrics conveying the story line, melodies, and harmonies written to fulfill that idea.

Composers make hundreds of individual creative choices along the road toward completion. These choices are protected by music copyright. Specific creative choices including the tones, rhythms, harmonies, and lyrics of musical works are currently the fundamental features that copyright protects in musical compositions. After a musical composition has been written, it can be performed, arranged, produced, and recorded, with these manifestations and/or derivations also protected by copyright. It has been historically at this post-composition point that technology has entered to partner with the composer to facilitate the performance, arrangement, realization, and recording of the composition.

With AI, we are now embarking from a new starting point: the collaboration of humans and machines at the outset of the compositional process itself. The legal protection and music licensing system was established to protect human creators, not machine or animal creators.¹ However, as new generations of AI music creations emerge and become less reliant on earlier protected musical works, the system faces new challenges requiring a reconsideration of authorship, controls, fair use, and other legal doctrines as old as the US Constitution.

The music industry has begun to respond to this boldly in the halls of justice and legislation, designing new regulations to curb infringement. While the idea/expression dichotomy and the human/machine dichotomy are well-established, identifying the contributions of human versus machine is a new frontier. How will a musicologist assist in the analysis, evaluation, determination and testimony before a judge and jury on machine-assisted musical compositions, and will the criteria change in determining infringement?

As a musicologist, I have developed a methodology for analyzing and comparing music for substantial similarity based on a hierarchy of musical elements as protected by copyright law. This hierarchy would likely continue to apply to machine-assisted musical compositions if music fundamentally remains the organization of sound and copyright continues to protect the fixation of this expression. The musical elements covered by compositional copyright protection would likely still require comparison in disputes regardless of how the music was created and which models were used to build it. In the end, if traditional copyright law is applied, then melodic pitch, rhythm, harmony, lyrics, and other elements of musical expression will still drive the discussion.

In addition, however, generative AI music poses a new layer of consideration in comparing musical works for possible copyright infringement. This new layer would be to distinguish between the human creator and the machine creator in cases of collaborative works. For example, if the lyrics were created by AI while the melody and harmonies were created by a human, the musicologist may be asked to distinguish between them and possibly to disregard any machine-made elements, while considering the interrelation of compositional features by humans and machines. An argument may also be raised about the musical influence that the AI-created components had over the human-created elements, as melodic features in vocal works, for example, are shaped by word length, syllabic divisions, and phrasing.

In the current music ownership system, human collaborators typically co-own and control the use and income as equal partners, such as a writer/lyricist partnership, or a band of four members. However, if AI is an acknowledged participant in the collaborator group, who will control the monetization, distribution, income splits, and subsequent remixes/arrangements of the music?

Four questions are likely to arise for music owners and their attorneys.

Question 1: What portion of the musical composition is protected, monetized, shared, and worthy of an infringement claim?

The potential for AI to be an equal collaborator with a human composer exposes the participants to both risks and rewards. Musical works carry two copyrights in the American copyright system: (a) composition (the “underlying work”) and (b) recordings. For the composition copyright, the elements normally include melodic pitch, rhythm, lyrics, and harmony. In the recording copyright, these elements include the specific performance, arrangement, and recording production traits, along with the composition itself.

If a musical composition results from a collaboration between a human and a machine, then the traditional filter that a musicologist applies to divide the underlying composition from its performance and recording might need to expand to allow for another division, between human and non-human compositional elements. Further, because AI-generated music is often built on machine learning of protected previous musical works, sources in prior art, including those within the public domain, may need to be added to the equation. This filtration of prior art has long remained a cornerstone of the extrinsic test and will likely remain so.

Question 2: Who owns the music in interactive musical compositions?

New AI developments include the creation of interactive composing/collaboration in real time. There are now musical installations in which musical works are playing, and audiences and members of the public can alter them during the performance, resulting in newly created compositions.

Another way that AI is impacting the modification of underlying musical works is with hyperinstruments,² a musical type of haptics. These instruments were invented by composer Tod Machover of the MIT Media Lab and involve a computer system that measures the movements, bodily responses, and sonic traits of a musician’s live performance, facilitating changes in the music determined by virtuosic musicians during a performance. This technology allows a performer to extend beyond the capabilities of a traditional instrument, for example, or a vocalist to extend beyond specific vocal ranges or techniques spontaneously during the performance, and it alters the original musical composition with potentially new pitches, rhythms, and other traits.

With interactive music, who owns the newly created work, given its original source? The musicologist may be asked to dissect the new works at issue in a new way, distinguishing between the original underlying composition, and the newly added third-party features. This convergence of compositional, performance, and production aspects poses further challenges to a musicologist’s role in determining the nature and origin of musical features at issue.

Question 3: What are the criteria for originality and its significance in machine-generated music?

The most startlingly original musical works often stand apart because they depart radically from musical tradition and norms of the time. A dramatic example of this was when Igor Stravinsky’s masterpiece The Rite of Spring caused a public outcry after its 1913 premiere in Paris, partly due to its revolutionary sounds and choreography. Creative genius and originality vary widely in degree, but as machine-generated music is built on learning from the patterns of pre-existing musical models, a reasonable prior art defense may be asserted in a copyright infringement dispute, with access challenges based not on human exposure but on machine learning. If machine learning and composition stem from replicating aspects of previous works in the same genre, for example, this could lead to challenges in originality.

Human-created musical works are often authentically original because they express the innermost personal thoughts, experiences, imagination, and feelings of their creators. To the extent that machine learning is based on an attempt to replicate or imply previous works and their chosen specific traits, narratives, and emotional messages, this would likely raise questions about originality, access, and copying.

References to earlier works, and evoking them, are well within the tradition of musical literature. Certainly, great composers, including Brahms and Mozart, have written full-scale concert variations of melodies based on previous works by others. Even earlier, J.S. Bach was often defined as a great consolidator of the music that came before him, albeit a highly distinctive one. Further, student composers have traditionally begun their study by immersing themselves in the music of their predecessors. Still, machine learning may be seen as moving beyond acceptable levels of modeling.

Question 4: How will deciding judges and juries determine if copyright infringement has occurred?

In a typical copyright dispute, a musicologist is often asked to compare the specific musical elements in two musical works and convey an evaluation to a judge and jury who apply the “lay listener” test. In the case of AI-generated music, or AI-human collaborative music, how will a jury of untrained listeners distinguish between the sources of the sounds they are hearing, and how will they draw a reasonable conclusion on infringement using the appropriate criteria?

It is possible that jury selection and judge’s instructions to the jurors will take the new developments into consideration, and that additional experts including data scientists, statisticians, and technologists will influence the outcome in these disputes going forward. If so, juries may be expected to evaluate new factors in the determination of copyright infringement beyond the more obvious and audible musical similarities and differences to which they have heretofore been asked to respond.

We have entered a new frontier of musical creation and innovation. It is likely to be a revolutionary decade in musical invention and expression. Amid this rapidly changing playing field, the protection of creativity is racing to keep pace while embracing new collaboration models in the spirit of human progress.

Endnotes

1. Naruto v. Slater, 888 F.3d 418 (9th Cir. 2018).

2. For a discussion of “hyperinstruments,” see https://opera.media.mit.edu/projects/hyperinstruments.html

The author thanks Dr. Geoffrey Pope and Tara Tempesta for their assistance in preparing this article.

Judith Finell is a musicologist and the president of Judith Finell MusicServices Inc., a music consulting firm in New York and Los Angeles, founded 25 years ago in New York. Since then, she has served as a consultant and expert witness in matters involving music copyright infringement and has advised on artist career and project development and a wide variety of music industry topics. Recently, Ms. Finell was honored to be the 2018 commencement speaker at UCLA’s Herb Alpert School of Music. She was also interviewed by NBC/Universal for a 2018 documentary entitled “The Universality of Music,” in which she discussed the ways in which she sees music as being an international language that can bridge cultural barriers that spoken language does not. Judith Finell was the testifying expert for the Marvin Gaye family in the milestone “Blurred Lines” case in Federal Court. She has testified in many other notable copyright infringement trials over the past 20 years. She and her team of musicologists regularly advise HBO, Lionsgate, Grey Advertising, CBS, Warner, Disney, and Sony Pictures on musical works for their commercials, films, and television series. Ms. Finell also frequently advises attorneys, advertising agencies, entertainment and recording companies, publishing firms, and musicians, addressing copyright issues, including those arising from digital sampling, electronic technology, and Internet musical usage. Ms. Finell was invited to teach forensic musicology at UCLA in 2018, where she continues to teach the only such course in the country. She holds an M.A. degree in musicology from the University of California at Berkeley and a B.A. from UCLA in piano performance. She has written numerous articles and a book in the area of contemporary music and copyright infringement and has appeared in trials on Court TV and before the American Intellectual Property Law Association.
She is a trustee of the Copyright Society of the U.S.A., and has appeared as a guest lecturer at the law schools of Harvard University, UCLA, Stanford, Columbia, Vanderbilt, George Washington, NYU, and Fordham, as well as the Beverly Hills Bar Assn., LA Copyright Society, and the Association of Independent Music Publishers. She may be reached by email at judi@jfmusicservices.com.

© 2024 Your Inside Track™ LLC

Your Inside Track™ reports on developments in the field of music and copyright, but it does not provide legal advice or opinions. Every case discussed depends on its particular facts and circumstances. Readers should always consult legal counsel and forensic experts as to any issue or matter of concern to them and not rely on the contents of this newsletter.